update repository references and improve script handling

9

arpspoof/CHANGELOG.md

Normal file

@@ -0,0 +1,9 @@

|

||||

- Implemented healthcheck

|

||||

- WARNING : update to supervisor 2022.11 before installing

|

||||

- Add codenotary sign

|

||||

- New standardized logic for Dockerfile build and packages installation

|

||||

|

||||

## 1.0.0 (07-12-2021)

|

||||

|

||||

- Update to latest version from t0mer/Arpspoof-Docker

|

||||

- Initial release

|

||||

131

arpspoof/Dockerfile

Normal file

@@ -0,0 +1,131 @@

|

||||

#============================#

|

||||

# ALEXBELGIUM'S DOCKERFILE #

|

||||

#============================#

|

||||

# _.------.

|

||||

# _.-` ('>.-`"""-.

|

||||

# '.--'` _'` _ .--.)

|

||||

# -' '-.-';` `

|

||||

# ' - _.' ``'--.

|

||||

# '---` .-'""`

|

||||

# /`

|

||||

#=== Home Assistant Addon ===#

|

||||

|

||||

#################

|

||||

# 1 Build Image #

|

||||

#################

|

||||

|

||||

ARG BUILD_FROM

|

||||

ARG BUILD_VERSION

|

||||

ARG BUILD_UPSTREAM="1.0.0"

|

||||

FROM ${BUILD_FROM}

|

||||

|

||||

##################

|

||||

# 2 Modify Image #

|

||||

##################

|

||||

|

||||

# Set S6 wait time

|

||||

ENV S6_CMD_WAIT_FOR_SERVICES=1 \

|

||||

S6_CMD_WAIT_FOR_SERVICES_MAXTIME=0 \

|

||||

S6_SERVICES_GRACETIME=0

|

||||

|

||||

##################

|

||||

# 3 Install apps #

|

||||

##################

|

||||

|

||||

# Add rootfs

|

||||

COPY rootfs/ /

|

||||

|

||||

# Uses /bin for compatibility purposes

|

||||

# hadolint ignore=DL4005

|

||||

RUN if [ ! -f /bin/sh ] && [ -f /usr/bin/sh ]; then ln -s /usr/bin/sh /bin/sh; fi && \

|

||||

if [ ! -f /bin/bash ] && [ -f /usr/bin/bash ]; then ln -s /usr/bin/bash /bin/bash; fi

|

||||

|

||||

# Modules

|

||||

ARG MODULES="00-banner.sh 01-custom_script.sh 00-global_var.sh"

|

||||

|

||||

# Automatic modules download

|

||||

ADD "https://raw.githubusercontent.com/alexbelgium/hassio-addons/master/.templates/ha_automodules.sh" "/ha_automodules.sh"

|

||||

RUN chmod 744 /ha_automodules.sh && /ha_automodules.sh "$MODULES" && rm /ha_automodules.sh

|

||||

|

||||

# Manual apps

|

||||

ENV PACKAGES="jq curl iproute2"

|

||||

|

||||

# Automatic apps & bashio

|

||||

ADD "https://raw.githubusercontent.com/alexbelgium/hassio-addons/master/.templates/ha_autoapps.sh" "/ha_autoapps.sh"

|

||||

RUN chmod 744 /ha_autoapps.sh && /ha_autoapps.sh "$PACKAGES" && rm /ha_autoapps.sh

|

||||

|

||||

################

|

||||

# 4 Entrypoint #

|

||||

################

|

||||

|

||||

# Add entrypoint

|

||||

ENV S6_STAGE2_HOOK=/ha_entrypoint.sh

|

||||

ADD "https://raw.githubusercontent.com/alexbelgium/hassio-addons/master/.templates/ha_entrypoint.sh" "/ha_entrypoint.sh"

|

||||

|

||||

# Entrypoint modifications

|

||||

ADD "https://raw.githubusercontent.com/alexbelgium/hassio-addons/master/.templates/ha_entrypoint_modif.sh" "/ha_entrypoint_modif.sh"

|

||||

RUN chmod 777 /ha_entrypoint.sh /ha_entrypoint_modif.sh && /ha_entrypoint_modif.sh && rm /ha_entrypoint_modif.sh

|

||||

|

||||

|

||||

ENTRYPOINT [ "/usr/bin/env" ]

|

||||

CMD [ "/ha_entrypoint.sh" ]

|

||||

|

||||

############

|

||||

# 5 Labels #

|

||||

############

|

||||

|

||||

ARG BUILD_ARCH

|

||||

ARG BUILD_DATE

|

||||

ARG BUILD_DESCRIPTION

|

||||

ARG BUILD_NAME

|

||||

ARG BUILD_REF

|

||||

ARG BUILD_REPOSITORY

|

||||

ARG BUILD_VERSION

|

||||

ENV BUILD_VERSION="${BUILD_VERSION}"

|

||||

LABEL \

|

||||

io.hass.name="${BUILD_NAME}" \

|

||||

io.hass.description="${BUILD_DESCRIPTION}" \

|

||||

io.hass.arch="${BUILD_ARCH}" \

|

||||

io.hass.type="addon" \

|

||||

io.hass.version=${BUILD_VERSION} \

|

||||

maintainer="alexbelgium (https://github.com/alexbelgium)" \

|

||||

org.opencontainers.image.title="${BUILD_NAME}" \

|

||||

org.opencontainers.image.description="${BUILD_DESCRIPTION}" \

|

||||

org.opencontainers.image.vendor="Home Assistant Add-ons" \

|

||||

org.opencontainers.image.authors="alexbelgium (https://github.com/alexbelgium)" \

|

||||

org.opencontainers.image.licenses="MIT" \

|

||||

org.opencontainers.image.url="https://github.com/alexbelgium" \

|

||||

org.opencontainers.image.source="https://github.com/${BUILD_REPOSITORY}" \

|

||||

org.opencontainers.image.documentation="https://github.com/${BUILD_REPOSITORY}/blob/main/README.md" \

|

||||

org.opencontainers.image.created=${BUILD_DATE} \

|

||||

org.opencontainers.image.revision=${BUILD_REF} \

|

||||

org.opencontainers.image.version=${BUILD_VERSION}

|

||||

|

||||

#################

|

||||

# 6 Healthcheck #

|

||||

#################

|

||||

|

||||

# Avoid spamming logs

|

||||

# hadolint ignore=SC2016

|

||||

RUN \

|

||||

# Handle Apache configuration

|

||||

if [ -d /etc/apache2/sites-available ]; then \

|

||||

for file in /etc/apache2/sites-*/*.conf; do \

|

||||

sed -i '/<VirtualHost/a \ \n # Match requests with the custom User-Agent "HealthCheck" \n SetEnvIf User-Agent "HealthCheck" dontlog \n # Exclude matching requests from access logs \n CustomLog ${APACHE_LOG_DIR}/access.log combined env=!dontlog' "$file"; \

|

||||

done; \

|

||||

fi && \

|

||||

\

|

||||

# Handle Nginx configuration

|

||||

if [ -f /etc/nginx/nginx.conf ]; then \

|

||||

awk '/http \{/{print; print "map $http_user_agent $loggable {\n default 1;\n \"~*HealthCheck\" 0;\n}\naccess_log /var/log/nginx/access.log combined if=$loggable;"; next}1' /etc/nginx/nginx.conf > /etc/nginx/nginx.conf.new && \

|

||||

mv /etc/nginx/nginx.conf.new /etc/nginx/nginx.conf; \

|

||||

fi

|

||||

|

||||

ENV HEALTH_PORT="7022" \

|

||||

HEALTH_URL=""

|

||||

HEALTHCHECK \

|

||||

--interval=5s \

|

||||

--retries=5 \

|

||||

--start-period=30s \

|

||||

--timeout=25s \

|

||||

CMD curl -A "HealthCheck: Docker/1.0" -s -f "http://127.0.0.1:${HEALTH_PORT}${HEALTH_URL}" >/dev/null 2>&1 || exit 1

|

||||

76

arpspoof/README.md

Normal file

@@ -0,0 +1,76 @@

|

||||

# Home assistant add-on: Arpspoof

|

||||

|

||||

[![Donate][donation-badge]](https://www.buymeacoffee.com/alexbelgium)

|

||||

[![Donate][paypal-badge]](https://www.paypal.com/donate/?hosted_button_id=DZFULJZTP3UQA)

|

||||

|

||||

[donation-badge]: https://img.shields.io/badge/Buy%20me%20a%20coffee%20(no%20paypal)-%23d32f2f?logo=buy-me-a-coffee&style=flat&logoColor=white

|

||||

[paypal-badge]: https://img.shields.io/badge/Buy%20me%20a%20coffee%20with%20Paypal-0070BA?logo=paypal&style=flat&logoColor=white

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

[](https://www.codacy.com/gh/alexbelgium/hassio-addons/dashboard?utm_source=github.com&utm_medium=referral&utm_content=alexbelgium/hassio-addons&utm_campaign=Badge_Grade)

|

||||

[](https://github.com/alexbelgium/hassio-addons/actions/workflows/weekly-supelinter.yaml)

|

||||

[](https://github.com/alexbelgium/hassio-addons/actions/workflows/onpush_builder.yaml)

|

||||

|

||||

_Thanks to everyone who has starred my repo! To star it, click on the image below; the star button is at the top right. Thanks!_

|

||||

|

||||

[](https://github.com/alexbelgium/hassio-addons/stargazers)

|

||||

|

||||

|

||||

|

||||

## About

|

||||

|

||||

[arpspoof](https://github.com/t0mer/Arpspoof-Docker) adds the ability to block the internet connection of devices on the local network.

|

||||

This addon is based on the docker image https://hub.docker.com/r/techblog/arpspoof-docker

|

||||

|

||||

See the full documentation at https://en.techblog.co.il/2021/03/15/home-assistant-cut-internet-connection-using-arpspoof/ or in the upstream image documentation: https://github.com/t0mer/Arpspoof-Docker

|

||||

|

||||

## Installation

|

||||

|

||||

Installing this add-on is straightforward and no different from installing any other add-on.

|

||||

|

||||

1. Add my add-ons repository to your Home Assistant instance (in the Supervisor add-on store, at the top right, or click the button below if you have configured My Home Assistant)

|

||||

[](https://my.home-assistant.io/redirect/supervisor_add_addon_repository/?repository_url=https%3A%2F%2Fgithub.com%2Falexbelgium%2Fhassio-addons)

|

||||

1. Install this add-on.

|

||||

1. Set the add-on options to your preferences.
1. Click the `Save` button to store your configuration.

|

||||

1. Start the add-on.

|

||||

1. Check the logs of the add-on to see if everything went well.

|

||||

1. Open the webUI and adapt the software options

|

||||

|

||||

## Configuration

|

||||

|

||||

Webui can be found at <http://homeassistant:PORT>.

|

||||

|

||||

```yaml

|

||||

ROUTER_IP: 127.0.0.1 #Required Router IP

|

||||

INTERFACE_NAME: name # Interface name. Autodetected if left empty.

|

||||

```
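
When `INTERFACE_NAME` is left empty, the add-on's startup script derives it from the route to a public address. The sketch below isolates that parsing step, assuming the `ip route get` output contains a `dev <name>` field. The helper name `detect_interface` and the sample route line are illustrative assumptions, not part of the add-on.

```shell
#!/usr/bin/env bash
# Sketch only: `detect_interface` is a hypothetical helper name.
# Reads `ip route get <address>` output on stdin and prints the word after "dev".
detect_interface() {
    sed -nE 's/.*dev ([^ ]+).*/\1/p'
}

# Typical use on a live system (requires iproute2):
#   INTERFACE_NAME="$(ip route get 8.8.8.8 | detect_interface)"

# Offline demonstration with a made-up route line:
sample='8.8.8.8 via 192.168.1.1 dev eth0 src 192.168.1.50 uid 0'
printf '%s\n' "$sample" | detect_interface
```

If the detected name is not the interface you expect, setting `INTERFACE_NAME` explicitly in the options is the safer choice.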

|

||||

|

||||

## Home-Assistant configuration

|

||||

|

||||

Description : [techblog](https://en.techblog.co.il/2021/03/15/home-assistant-cut-internet-connection-using-arpspoof/)

|

||||

|

||||

You can use a `command_line` switch to temporarily disable internet access for a device on your network.

|

||||

|

||||

```yaml

|

||||

- platform: command_line

|

||||

switches:

|

||||

iphone_internet:

|

||||

friendly_name: "iPhone internet"

|

||||

command_off: "/usr/bin/curl -f -X GET http://{HA-IP}:7022/disconnect?ip={iPhoneIP}"

|

||||

command_on: "/usr/bin/curl -f -X GET http://{HA-IP}:7022/reconnect?ip={iPhoneIP}"

|

||||

command_state: "/usr/bin/curl -f -X GET http://{HA-IP}:7022/status?ip={iPhoneIP}"

|

||||

value_template: >

|

||||

{{ value != "1" }}

|

||||

```
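
The three endpoints used by the switch (`disconnect`, `reconnect`, `status`) can also be called by hand when testing. The following is a minimal sketch, assuming the add-on listens on port 7022 as in the example above. `HA_IP`, `ARPSPOOF_PORT`, the helper names and the IP addresses are placeholders chosen for illustration.

```shell
#!/usr/bin/env bash
# Sketch only: variable and helper names are assumptions for illustration.
HA_IP="${HA_IP:-192.168.1.10}"            # address of the Home Assistant host (placeholder)
ARPSPOOF_PORT="${ARPSPOOF_PORT:-7022}"    # port used in the switch example

# Build the URL for one of the three actions: disconnect, reconnect, status.
arpspoof_url() {
    local action="$1" device_ip="$2"
    printf 'http://%s:%s/%s?ip=%s' "$HA_IP" "$ARPSPOOF_PORT" "$action" "$device_ip"
}

# Send the request; -f makes curl exit non-zero on HTTP errors.
arpspoof_call() {
    curl -f -s -X GET "$(arpspoof_url "$1" "$2")"
}

# Print the URL that a "status" query for one device would use.
arpspoof_url status 192.168.1.23
printf '\n'
```

Running `arpspoof_call status 192.168.1.23` against a live add-on should return the value that the `value_template` above compares with `"1"`.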

|

||||

|

||||

## Support

|

||||

|

||||

Create an issue on github

|

||||

|

||||

## Illustration

|

||||

|

||||

No illustration

|

||||

10

arpspoof/build.json

Normal file

@@ -0,0 +1,10 @@

|

||||

{

|

||||

"build_from": {

|

||||

"aarch64": "techblog/arpspoof-docker:1.0.0",

|

||||

"amd64": "techblog/arpspoof-docker:1.0.0",

|

||||

"armv7": "techblog/arpspoof-docker:1.0.0"

|

||||

},

|

||||

"codenotary": {

|

||||

"signer": "alexandrep.github@gmail.com"

|

||||

}

|

||||

}

|

||||

BIN

arpspoof/icon.png

Normal file

|

After Width: | Height: | Size: 28 KiB |

BIN

arpspoof/logo.png

Normal file

|

After Width: | Height: | Size: 28 KiB |

16

arpspoof/rootfs/etc/cont-init.d/99-run.sh

Executable file

@@ -0,0 +1,16 @@

|

||||

#!/usr/bin/env bashio

|

||||

# shellcheck shell=bash

|

||||

set -e

|

||||

|

||||

# Avoid unbound variables

|

||||

set +u

|

||||

|

||||

# Autodefine if not defined

|

||||

if [ -z "$INTERFACE_NAME" ]; then

|

||||

# shellcheck disable=SC2155

|

||||

export INTERFACE_NAME="$(ip route get 8.8.8.8 | sed -nr 's/.*dev ([^\ ]+).*/\1/p')"

|

||||

bashio::log.blue "Autodetection : INTERFACE_NAME=$INTERFACE_NAME"

|

||||

fi

|

||||

|

||||

bashio::log.info "Starting..."

|

||||

/usr/bin/python3 /opt/arpspoof/arpspoof.py

|

||||

BIN

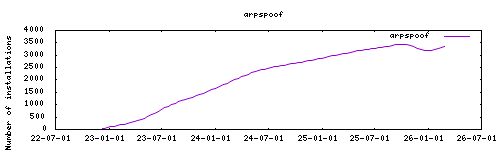

arpspoof/stats.png

Normal file

|

After Width: | Height: | Size: 2.1 KiB |

8

arpspoof/updater.json

Normal file

@@ -0,0 +1,8 @@

|

||||

{

|

||||

"last_update": "07-12-2021",

|

||||

"repository": "alexbelgium/hassio-addons",

|

||||

"slug": "arpspoof",

|

||||

"source": "github",

|

||||

"upstream_repo": "t0mer/Arpspoof-Docker",

|

||||

"upstream_version": "1.0.0"

|

||||

}

|

||||

1

beta/CHANGELOG.md

Executable file

@@ -0,0 +1 @@

|

||||

Please reference the [beta commits](https://github.com/jakowenko/double-take/commits/beta) for changes.

|

||||

1

beta/Dockerfile

Normal file

@@ -0,0 +1 @@

|

||||

FROM jakowenko/double-take:beta

|

||||

11

beta/README.md

Executable file

@@ -0,0 +1,11 @@

|

||||

[](https://github.com/jakowenko/double-take) [](https://github.com/jakowenko/double-take/stargazers) [](https://hub.docker.com/r/jakowenko/double-take) [](https://discord.gg/3pumsskdN5)

|

||||

|

||||

![amd64][amd64-shield]

|

||||

|

||||

# Double Take

|

||||

|

||||

Unified UI and API for processing and training images for facial recognition.

|

||||

|

||||

[Documentation](https://github.com/jakowenko/double-take/tree/beta#readme)

|

||||

|

||||

[amd64-shield]: https://img.shields.io/badge/amd64-yes-green.svg

|

||||

35

beta/config.json

Executable file

@@ -0,0 +1,35 @@

|

||||

{

|

||||

"name": "Double Take (beta)",

|

||||

"version": "1.13.1",

|

||||

"url": "https://github.com/jakowenko/double-take",

|

||||

"panel_icon": "mdi:face-recognition",

|

||||

"slug": "double-take-beta",

|

||||

"description": "Unified UI and API for processing and training images for facial recognition",

|

||||

"arch": ["amd64"],

|

||||

"startup": "application",

|

||||

"boot": "auto",

|

||||

"ingress": true,

|

||||

"ingress_port": 3000,

|

||||

"ports": {

|

||||

"3000/tcp": 3000

|

||||

},

|

||||

"ports_description": {

|

||||

"3000/tcp": "Web interface (not required for Home Assistant ingress)"

|

||||

},

|

||||

"map": ["media:rw", "config:rw"],

|

||||

"environment": {

|

||||

"HA_ADDON": "true"

|

||||

},

|

||||

"options": {

|

||||

"STORAGE_PATH": "/config/double-take",

|

||||

"CONFIG_PATH": "/config/double-take",

|

||||

"SECRETS_PATH": "/config",

|

||||

"MEDIA_PATH": "/media/double-take"

|

||||

},

|

||||

"schema": {

|

||||

"STORAGE_PATH": "str",

|

||||

"CONFIG_PATH": "str",

|

||||

"SECRETS_PATH": "str",

|

||||

"MEDIA_PATH": "str"

|

||||

}

|

||||

}

|

||||

BIN

beta/icon.png

Executable file

|

After Width: | Height: | Size: 6.3 KiB |

132

changedetection.io/CHANGELOG.md

Normal file

@@ -0,0 +1,132 @@

|

||||

|

||||

## 0.49.4 (15-03-2025)

|

||||

- Update to latest version from linuxserver/docker-changedetection.io (changelog : https://github.com/linuxserver/docker-changedetection.io/releases)

|

||||

|

||||

## 0.49.3 (01-03-2025)

|

||||

- Update to latest version from linuxserver/docker-changedetection.io (changelog : https://github.com/linuxserver/docker-changedetection.io/releases)

|

||||

|

||||

## 0.49.2 (21-02-2025)

|

||||

- Update to latest version from linuxserver/docker-changedetection.io (changelog : https://github.com/linuxserver/docker-changedetection.io/releases)

|

||||

|

||||

## 0.49.1 (15-02-2025)

|

||||

- Update to latest version from linuxserver/docker-changedetection.io (changelog : https://github.com/linuxserver/docker-changedetection.io/releases)

|

||||

|

||||

## 0.49.0 (25-01-2025)

|

||||

- Update to latest version from linuxserver/docker-changedetection.io (changelog : https://github.com/linuxserver/docker-changedetection.io/releases)

|

||||

|

||||

## 0.48.6 (11-01-2025)

|

||||

- Update to latest version from linuxserver/docker-changedetection.io (changelog : https://github.com/linuxserver/docker-changedetection.io/releases)

|

||||

|

||||

## 0.48.5 (28-12-2024)

|

||||

- Update to latest version from linuxserver/docker-changedetection.io (changelog : https://github.com/linuxserver/docker-changedetection.io/releases)

|

||||

|

||||

## 0.48.4 (21-12-2024)

|

||||

- Update to latest version from linuxserver/docker-changedetection.io (changelog : https://github.com/linuxserver/docker-changedetection.io/releases)

|

||||

|

||||

## 0.48.1 (07-12-2024)

|

||||

- Update to latest version from linuxserver/docker-changedetection.io (changelog : https://github.com/linuxserver/docker-changedetection.io/releases)

|

||||

|

||||

## 0.47.6 (09-11-2024)

|

||||

- Update to latest version from linuxserver/docker-changedetection.io (changelog : https://github.com/linuxserver/docker-changedetection.io/releases)

|

||||

|

||||

## 0.47.5 (02-11-2024)

|

||||

- Update to latest version from linuxserver/docker-changedetection.io (changelog : https://github.com/linuxserver/docker-changedetection.io/releases)

|

||||

|

||||

## 0.47.3 (12-10-2024)

|

||||

- Update to latest version from linuxserver/docker-changedetection.io (changelog : https://github.com/linuxserver/docker-changedetection.io/releases)

|

||||

|

||||

## 0.46.4 (07-09-2024)

|

||||

- Update to latest version from linuxserver/docker-changedetection.io (changelog : https://github.com/linuxserver/docker-changedetection.io/releases)

|

||||

|

||||

## 0.46.3 (24-08-2024)

|

||||

- Update to latest version from linuxserver/docker-changedetection.io (changelog : https://github.com/linuxserver/docker-changedetection.io/releases)

|

||||

|

||||

## 0.46.2 (03-08-2024)

|

||||

- Update to latest version from linuxserver/docker-changedetection.io (changelog : https://github.com/linuxserver/docker-changedetection.io/releases)

|

||||

## 0.46.1-2 (23-07-2024)

|

||||

- Minor bugs fixed

|

||||

|

||||

## 0.46.1 (20-07-2024)

|

||||

- Update to latest version from linuxserver/docker-changedetection.io (changelog : https://github.com/linuxserver/docker-changedetection.io/releases)

|

||||

|

||||

## 0.45.26 (13-07-2024)

|

||||

- Update to latest version from linuxserver/docker-changedetection.io (changelog : https://github.com/linuxserver/docker-changedetection.io/releases)

|

||||

## 0.45.25-2 (08-07-2024)

|

||||

- Minor bugs fixed

|

||||

|

||||

## 0.45.25 (06-07-2024)

|

||||

- Update to latest version from linuxserver/docker-changedetection.io (changelog : https://github.com/linuxserver/docker-changedetection.io/releases)

|

||||

|

||||

## 0.45.24 (22-06-2024)

|

||||

- Update to latest version from linuxserver/docker-changedetection.io (changelog : https://github.com/linuxserver/docker-changedetection.io/releases)

|

||||

|

||||

## 0.45.23 (25-05-2024)

|

||||

- Update to latest version from linuxserver/docker-changedetection.io (changelog : https://github.com/linuxserver/docker-changedetection.io/releases)

|

||||

## 0.45.22-2 (21-05-2024)

|

||||

- Minor bugs fixed

|

||||

|

||||

## 0.45.22 (04-05-2024)

|

||||

- Update to latest version from linuxserver/docker-changedetection.io (changelog : https://github.com/linuxserver/docker-changedetection.io/releases)

|

||||

|

||||

## 0.45.21 (27-04-2024)

|

||||

- Update to latest version from linuxserver/docker-changedetection.io (changelog : https://github.com/linuxserver/docker-changedetection.io/releases)

|

||||

|

||||

## 0.45.20 (20-04-2024)

|

||||

- Update to latest version from linuxserver/docker-changedetection.io (changelog : https://github.com/linuxserver/docker-changedetection.io/releases)

|

||||

|

||||

## 0.45.17 (06-04-2024)

|

||||

- Update to latest version from linuxserver/docker-changedetection.io (changelog : https://github.com/linuxserver/docker-changedetection.io/releases)

|

||||

|

||||

## 0.45.16 (09-03-2024)

|

||||

|

||||

- Update to latest version from linuxserver/docker-changedetection.io

|

||||

|

||||

## 0.45.14 (10-02-2024)

|

||||

|

||||

- Update to latest version from linuxserver/docker-changedetection.io

|

||||

|

||||

## 0.45.13 (20-01-2024)

|

||||

|

||||

- Update to latest version from linuxserver/docker-changedetection.io

|

||||

|

||||

## 0.45.12 (06-01-2024)

|

||||

|

||||

- Update to latest version from linuxserver/docker-changedetection.io

|

||||

|

||||

## 0.45.9 (23-12-2023)

|

||||

|

||||

- Update to latest version from linuxserver/docker-changedetection.io

|

||||

|

||||

## 0.45.8.1 (02-12-2023)

|

||||

|

||||

- Update to latest version from linuxserver/docker-changedetection.io

|

||||

## 0.45.7.3-2 (21-11-2023)

|

||||

|

||||

- Minor bugs fixed

|

||||

|

||||

## 0.45.7.3 (18-11-2023)

|

||||

|

||||

- Update to latest version from linuxserver/docker-changedetection.io

|

||||

|

||||

## 0.45.7 (11-11-2023)

|

||||

|

||||

- Update to latest version from linuxserver/docker-changedetection.io

|

||||

|

||||

## 0.45.5 (04-11-2023)

|

||||

|

||||

- Update to latest version from linuxserver/docker-changedetection.io

|

||||

## 0.45.3-2 (01-11-2023)

|

||||

|

||||

- Minor bugs fixed

|

||||

|

||||

## 0.45.3 (07-10-2023)

|

||||

|

||||

- Update to latest version from linuxserver/docker-changedetection.io

|

||||

|

||||

## 0.45.2 (23-09-2023)

|

||||

|

||||

- Update to latest version from linuxserver/docker-changedetection.io

|

||||

|

||||

## 0.45.1 (10-09-2023)

|

||||

|

||||

- Initial build

|

||||

85

changedetection.io/README.md

Normal file

@@ -0,0 +1,85 @@

|

||||

# Home assistant add-on: changedetection.io

|

||||

|

||||

[![Donate][donation-badge]](https://www.buymeacoffee.com/alexbelgium)

|

||||

[![Donate][paypal-badge]](https://www.paypal.com/donate/?hosted_button_id=DZFULJZTP3UQA)

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

[](https://www.codacy.com/gh/alexbelgium/hassio-addons/dashboard?utm_source=github.com&utm_medium=referral&utm_content=alexbelgium/hassio-addons&utm_campaign=Badge_Grade)

|

||||

[](https://github.com/alexbelgium/hassio-addons/actions/workflows/weekly-supelinter.yaml)

|

||||

[](https://github.com/alexbelgium/hassio-addons/actions/workflows/onpush_builder.yaml)

|

||||

|

||||

[donation-badge]: https://img.shields.io/badge/Buy%20me%20a%20coffee%20(no%20paypal)-%23d32f2f?logo=buy-me-a-coffee&style=flat&logoColor=white

|

||||

[paypal-badge]: https://img.shields.io/badge/Buy%20me%20a%20coffee%20with%20Paypal-0070BA?logo=paypal&style=flat&logoColor=white

|

||||

|

||||

_Thanks to everyone who has starred my repo! To star it, click on the image below; the star button is at the top right. Thanks!_

|

||||

|

||||

[](https://github.com/alexbelgium/hassio-addons/stargazers)

|

||||

|

||||

|

||||

|

||||

## About

|

||||

|

||||

[Changedetection.io](https://github.com/dgtlmoon/changedetection.io) provides free, open-source web page monitoring, notification and change detection.

|

||||

|

||||

This addon is based on the [docker image](https://github.com/linuxserver/docker-changedetection.io) from linuxserver.io.

|

||||

|

||||

## Configuration

|

||||

|

||||

### Main app

|

||||

|

||||

Web UI can be found at `<your-ip>:5000`, also accessible from the add-on page.

|

||||

|

||||

#### Sidebar shortcut

|

||||

|

||||

You can add a shortcut pointing to your Changedetection.io instance with the following steps:

|

||||

1. Go to <kbd>⚙ Settings</kbd> > <kbd>Dashboards</kbd>

|

||||

2. Click <kbd>➕ Add Dashboard</kbd> at the bottom corner

|

||||

3. Select the <kbd>Webpage</kbd> option, and paste the Web UI URL you got from the add-on page.

|

||||

4. Fill in the title for the sidebar item, an icon (suggestion: `mdi:vector-difference`), and a **relative URL** for that panel (e.g. `change-detection`). Lastly, confirm it.

|

||||

|

||||

### Configurable options

|

||||

|

||||

```yaml

|

||||

PGID: user

|

||||

PUID: user

|

||||

TZ: Etc/UTC # Timezone to use, see https://en.wikipedia.org/wiki/List_of_tz_database_time_zones#List

|

||||

BASE_URL: Specify the full URL (including protocol) when running behind a reverse proxy

|

||||

```

|

||||

|

||||

### Connect to browserless Chrome (from @RhysMcW)

|

||||

|

||||

In HA, use the File Editor add-on (or Filebrowser) and edit the Changedetection.io config file at `/homeassistant/addons_config/changedetection.io/config.yaml`.

|

||||

|

||||

Add the following line to the end of it:

|

||||

```yaml

|

||||

PLAYWRIGHT_DRIVER_URL: ws://2937404c-browserless-chrome:3000/chromium?launch={"defaultViewport":{"height":720,"width":1280},"headless":false,"stealth":true}&blockAds=true

|

||||

```

|

||||

|

||||

Remember to also leave a blank line at the end of the file, in line with YAML requirements.
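
The edit can also be scripted. This is a minimal sketch, assuming shell access to the config file; `append_config_line` is a hypothetical helper, and the demonstration runs on a temporary file with a shortened URL rather than on the real configuration.

```shell
#!/usr/bin/env bash
# Sketch only: `append_config_line` is a hypothetical helper, not part of the add-on.
# Appends one line to a file, skipping exact duplicates and keeping a final newline.
append_config_line() {
    local file="$1" line="$2"
    # Nothing to do if the exact line is already present.
    if [ -f "$file" ] && grep -qxF -- "$line" "$file"; then
        return 0
    fi
    # If the file is non-empty and does not end with a newline, add one first.
    if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
        printf '\n' >> "$file"
    fi
    printf '%s\n' "$line" >> "$file"
}

# Demonstration on a temporary file (placeholder content, no trailing newline on purpose).
demo_file="$(mktemp)"
printf 'BASE_URL: http://example.invalid' > "$demo_file"
# URL shortened for readability; use the full line from the block above in practice.
append_config_line "$demo_file" 'PLAYWRIGHT_DRIVER_URL: ws://2937404c-browserless-chrome:3000/chromium'
cat "$demo_file"
rm -f "$demo_file"
```

Calling the helper twice with the same line leaves the file unchanged the second time, so it is safe to re-run.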

|

||||

|

||||

The `2937404c-browserless-chrome` hostname is displayed in the UI, on the Browserless Chromium addon page:

|

||||

|

||||

|

||||

You can also fetch it:

|

||||

* By using SSH and running `docker exec -i hassio_dns cat "/config/hosts"`

|

||||

* From the CLI in HA, using arp

|

||||

* You should also be able to use your HA IP address.
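
For the SSH option, the hostname can be filtered out of the hosts file instead of being read by eye. This is a rough sketch under the assumption that each line holds an IP address followed by one or more names; `find_addon_host` and the sample entries are made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch only: `find_addon_host` is a hypothetical helper, and the hosts-file
# layout (IP address followed by one or more names) is an assumption.
find_addon_host() {
    local pattern="$1"
    awk -v pat="$pattern" '
        /^[[:space:]]*#/ { next }            # skip comment lines
        {
            for (i = 2; i <= NF; i++) {
                if (index($i, pat) > 0) { print $i; exit }
            }
        }'
}

# Live use, based on the SSH command above:
#   docker exec -i hassio_dns cat "/config/hosts" | find_addon_host browserless-chrome

# Offline demonstration with made-up entries:
printf '%s\n' \
    '172.30.32.1 supervisor hassio' \
    '172.30.33.5 2937404c-browserless-chrome 2937404c_browserless_chrome' \
    | find_addon_host browserless-chrome
```

The first name containing the pattern is printed, which is the value to place in `PLAYWRIGHT_DRIVER_URL`.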

|

||||

|

||||

Then restart the Changedetection.io add-on - after that you can use the browser options in Changedetection.io.

|

||||

|

||||

## Installation

|

||||

|

||||

Installing this add-on is straightforward and no different from installing any other Hass.io add-on.

|

||||

|

||||

1. [Add my Hass.io add-ons repository][repository] to your Hass.io instance.

|

||||

1. Install this add-on.

|

||||

1. Click the `Save` button to store your configuration.

|

||||

1. Start the add-on.

|

||||

1. Check the logs of the add-on to see if everything went well.

|

||||

1. Carefully configure the add-on to your preferences; see the official documentation for that.

|

||||

|

||||

[repository]: https://github.com/alexbelgium/hassio-addons

|

||||

67

changedetection.io/apparmor.txt

Normal file

@@ -0,0 +1,67 @@

|

||||

#include <tunables/global>

|

||||

|

||||

profile addon_db21ed7f_changedetection.io_nas flags=(attach_disconnected,mediate_deleted) {

|

||||

#include <abstractions/base>

|

||||

|

||||

capability,

|

||||

file,

|

||||

signal,

|

||||

mount,

|

||||

umount,

|

||||

remount,

|

||||

network udp,

|

||||

network tcp,

|

||||

network dgram,

|

||||

network stream,

|

||||

network inet,

|

||||

network inet6,

|

||||

network netlink raw,

|

||||

network unix dgram,

|

||||

|

||||

capability setgid,

|

||||

capability setuid,

|

||||

capability sys_admin,

|

||||

capability dac_read_search,

|

||||

# capability dac_override,

|

||||

# capability sys_rawio,

|

||||

|

||||

# S6-Overlay

|

||||

/init ix,

|

||||

/run/{s6,s6-rc*,service}/** ix,

|

||||

/package/** ix,

|

||||

/command/** ix,

|

||||

/run/{,**} rwk,

|

||||

/dev/tty rw,

|

||||

/bin/** ix,

|

||||

/usr/bin/** ix,

|

||||

/usr/lib/bashio/** ix,

|

||||

/etc/s6/** rix,

|

||||

/run/s6/** rix,

|

||||

/etc/services.d/** rwix,

|

||||

/etc/cont-init.d/** rwix,

|

||||

/etc/cont-finish.d/** rwix,

|

||||

/init rix,

|

||||

/var/run/** mrwkl,

|

||||

/var/run/ mrwkl,

|

||||

/dev/i2c-1 mrwkl,

|

||||

# Files required

|

||||

/dev/fuse mrwkl,

|

||||

/dev/sda1 mrwkl,

|

||||

/dev/sdb1 mrwkl,

|

||||

/dev/nvme0 mrwkl,

|

||||

/dev/nvme1 mrwkl,

|

||||

/dev/mmcblk0p1 mrwkl,

|

||||

/dev/ttyUSB0 mrwkl,

|

||||

/dev/* mrwkl,

|

||||

/tmp/** mrkwl,

|

||||

|

||||

# Data access

|

||||

/data/** rw,

|

||||

|

||||

# suppress ptrace denials when using 'docker ps' or using 'ps' inside a container

|

||||

ptrace (trace,read) peer=docker-default,

|

||||

|

||||

# docker daemon confinement requires explicit allow rule for signal

|

||||

signal (receive) set=(kill,term) peer=/usr/bin/docker,

|

||||

|

||||

}

|

||||

9

changedetection.io/build.json

Normal file

@@ -0,0 +1,9 @@

|

||||

{

|

||||

"build_from": {

|

||||

"aarch64": "lscr.io/linuxserver/changedetection.io:arm64v8-latest",

|

||||

"amd64": "lscr.io/linuxserver/changedetection.io:amd64-latest"

|

||||

},

|

||||

"codenotary": {

|

||||

"signer": "alexandrep.github@gmail.com"

|

||||

}

|

||||

}

|

||||

41

changedetection.io/config.json

Normal file

@@ -0,0 +1,41 @@

|

||||

{

|

||||

"arch": [

|

||||

"aarch64",

|

||||

"amd64"

|

||||

],

|

||||

"codenotary": "alexandrep.github@gmail.com",

|

||||

"description": "web page monitoring, notification and change detection",

|

||||

"environment": {

|

||||

"LC_ALL": "en_US.UTF-8",

|

||||

"TIMEOUT": "60000"

|

||||

},

|

||||

"image": "ghcr.io/alexbelgium/changedetection.io-{arch}",

|

||||

"init": false,

|

||||

"map": [

|

||||

"config:rw"

|

||||

],

|

||||

"name": "Changedetection.io",

|

||||

"options": {

|

||||

"PGID": 0,

|

||||

"PUID": 0,

|

||||

"TIMEOUT": "60000"

|

||||

},

|

||||

"ports": {

|

||||

"5000/tcp": 5000

|

||||

},

|

||||

"ports_description": {

|

||||

"5000/tcp": "Webui"

|

||||

},

|

||||

"schema": {

|

||||

"BASE_URL": "str?",

|

||||

"PGID": "int",

|

||||

"PUID": "int",

|

||||

"TIMEOUT": "int",

|

||||

"TZ": "str?"

|

||||

},

|

||||

"slug": "changedetection.io",

|

||||

"udev": true,

|

||||

"url": "https://github.com/alexbelgium/hassio-addons/tree/master/changedetection.io",

|

||||

"version": "0.49.4",

|

||||

"webui": "http://[HOST]:[PORT:5000]"

|

||||

}

|

||||

BIN

changedetection.io/icon.png

Normal file

|

After Width: | Height: | Size: 24 KiB |

BIN

changedetection.io/logo.png

Normal file

|

After Width: | Height: | Size: 24 KiB |

0

changedetection.io/rootfs/blank

Normal file

15

changedetection.io/rootfs/etc/cont-init.d/21-folders.sh

Executable file

@@ -0,0 +1,15 @@

|

||||

#!/usr/bin/with-contenv bashio

|

||||

# shellcheck shell=bash

|

||||

set -e

|

||||

|

||||

# Define user

|

||||

PUID=$(bashio::config "PUID")

|

||||

PGID=$(bashio::config "PGID")

|

||||

|

||||

# Check data location

|

||||

LOCATION="/config/addons_config/changedetection.io"

|

||||

|

||||

# Check structure

|

||||

mkdir -p "$LOCATION"

|

||||

chown -R "$PUID":"$PGID" "$LOCATION"

|

||||

chmod -R 755 "$LOCATION"

|

||||

BIN

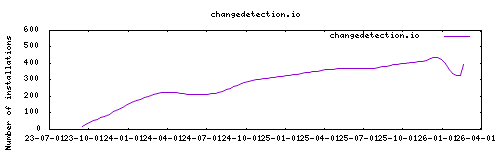

changedetection.io/stats.png

Normal file

|

After Width: | Height: | Size: 2.3 KiB |

9

changedetection.io/updater.json

Normal file

@@ -0,0 +1,9 @@

|

||||

{

|

||||

"github_fulltag": "false",

|

||||

"last_update": "15-03-2025",

|

||||

"repository": "alexbelgium/hassio-addons",

|

||||

"slug": "changedetection.io",

|

||||

"source": "github",

|

||||

"upstream_repo": "linuxserver/docker-changedetection.io",

|

||||

"upstream_version": "0.49.4"

|

||||

}

|

||||

1

compreface/CHANGELOG.md

Executable file

@@ -0,0 +1 @@

|

||||

Please reference the [release notes](https://github.com/exadel-inc/CompreFace/releases) for changes.

|

||||

6

compreface/Dockerfile

Executable file

@@ -0,0 +1,6 @@

|

||||

FROM exadel/compreface:1.1.0

|

||||

ENV PGDATA=/data/database

|

||||

RUN apt-get update && apt-get install jq -y && rm -rf /var/lib/apt/lists/*

|

||||

COPY postgresql.conf /etc/postgresql/13/main/postgresql.conf

|

||||

COPY run.sh /

|

||||

CMD ["/run.sh"]

|

||||

7

compreface/README.md

Executable file

@@ -0,0 +1,7 @@

|

||||

# Exadel CompreFace

|

||||

|

||||

This add-on runs the [single container](https://github.com/exadel-inc/CompreFace/issues/651) version of CompreFace.

|

||||

|

||||

CompreFace will be exposed on port 8000 - you can change this in the add-on configuration if another port is required.

|

||||

|

||||

[Documentation](https://github.com/exadel-inc/CompreFace#readme)

|

||||

30

compreface/config.json

Executable file

@@ -0,0 +1,30 @@

|

||||

{

|

||||

"name": "Exadel CompreFace",

|

||||

"version": "1.1.0",

|

||||

"url": "https://github.com/exadel-inc/CompreFace",

|

||||

"slug": "compreface",

|

||||

"description": "Exadel CompreFace is a leading free and open-source face recognition system",

|

||||

"arch": ["amd64"],

|

||||

"startup": "application",

|

||||

"boot": "auto",

|

||||

"ports": {

|

||||

"80/tcp": 8000

|

||||

},

|

||||

"ports_description": {

|

||||

"80/tcp": "UI/API"

|

||||

},

|

||||

"options": {

|

||||

"POSTGRES_URL": "jdbc:postgresql://localhost:5432/frs",

|

||||

"POSTGRES_USER": "compreface",

|

||||

"POSTGRES_PASSWORD": "M7yfTsBscdqvZs49",

|

||||

"POSTGRES_DB": "frs",

|

||||

"API_JAVA_OPTS": "-Xmx1g"

|

||||

},

|

||||

"schema": {

|

||||

"POSTGRES_URL": "str",

|

||||

"POSTGRES_USER": "str",

|

||||

"POSTGRES_PASSWORD": "str",

|

||||

"POSTGRES_DB": "str",

|

||||

"API_JAVA_OPTS": "str"

|

||||

}

|

||||

}

|

||||

BIN

compreface/icon.png

Executable file

Size: 39 KiB

785

compreface/postgresql.conf

Executable file

@@ -0,0 +1,785 @@

# CompreFace changes:
# 1. Changed `data_directory` so that it always points to `/data/database` and does not depend on the PostgreSQL version.

# -----------------------------
# PostgreSQL configuration file
# -----------------------------
#
# This file consists of lines of the form:
#
#   name = value
#
# (The "=" is optional.)  Whitespace may be used.  Comments are introduced with
# "#" anywhere on a line.  The complete list of parameter names and allowed
# values can be found in the PostgreSQL documentation.
#
# The commented-out settings shown in this file represent the default values.
# Re-commenting a setting is NOT sufficient to revert it to the default value;
# you need to reload the server.
#
# This file is read on server startup and when the server receives a SIGHUP
# signal.  If you edit the file on a running system, you have to SIGHUP the
# server for the changes to take effect, run "pg_ctl reload", or execute
# "SELECT pg_reload_conf()".  Some parameters, which are marked below,
# require a server shutdown and restart to take effect.
#
# Any parameter can also be given as a command-line option to the server, e.g.,
# "postgres -c log_connections=on".  Some parameters can be changed at run time
# with the "SET" SQL command.
#
# Memory units:  B  = bytes            Time units:  us  = microseconds
#                kB = kilobytes                     ms  = milliseconds
#                MB = megabytes                     s   = seconds
#                GB = gigabytes                     min = minutes
#                TB = terabytes                     h   = hours
#                                                   d   = days

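
The header above describes the line format (`name = value`, optional `=`, `#` comments anywhere on a line). A small Python sketch of a parser for that format can help when checking which settings in this file are actually active. It is a simplification under stated assumptions: the function name `parse_conf_line` is made up for this example, and doubled quotes (`''`) inside quoted values and `include` directives are not handled.

```python
import re

# name, optional "=", then the rest of the line as the value
_SETTING = re.compile(r"^(\w[\w.]*)\s*=?\s*(.*)$")


def parse_conf_line(line: str):
    """Return (name, value) for an active setting, or None for blanks/comments."""
    # Cut the line at the first '#' that is not inside a single-quoted value.
    in_quote = False
    cut = len(line)
    for i, ch in enumerate(line):
        if ch == "'":
            in_quote = not in_quote
        elif ch == "#" and not in_quote:
            cut = i
            break
    body = line[:cut].strip()
    if not body:
        return None  # empty line or commented-out setting
    match = _SETTING.match(body)
    if match is None:
        return None
    name, value = match.group(1), match.group(2).strip()
    # Remove one pair of surrounding single quotes, if present.
    if len(value) >= 2 and value[0] == "'" and value[-1] == "'":
        value = value[1:-1]
    return name, value


active = parse_conf_line("port = 5432 # (change requires restart)")
commented = parse_conf_line("#listen_addresses = 'localhost'")
```

Here `active` is `("port", "5432")` and `commented` is `None`, mirroring the rule that commented-out settings only document defaults.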

#------------------------------------------------------------------------------
# FILE LOCATIONS
#------------------------------------------------------------------------------

# The default values of these variables are driven from the -D command-line
# option or PGDATA environment variable, represented here as ConfigDir.

data_directory = '/data/database'                       # use data in another directory
                                                        # (change requires restart)
hba_file = '/etc/postgresql/13/main/pg_hba.conf'        # host-based authentication file
                                                        # (change requires restart)
ident_file = '/etc/postgresql/13/main/pg_ident.conf'    # ident configuration file
                                                        # (change requires restart)

# If external_pid_file is not explicitly set, no extra PID file is written.
external_pid_file = '/var/run/postgresql/13-main.pid'   # write an extra PID file
                                                        # (change requires restart)


#------------------------------------------------------------------------------

|

||||

# CONNECTIONS AND AUTHENTICATION

|

||||

#------------------------------------------------------------------------------

|

||||

|

||||

# - Connection Settings -

|

||||

|

||||

#listen_addresses = 'localhost' # what IP address(es) to listen on;

|

||||

# comma-separated list of addresses;

|

||||

# defaults to 'localhost'; use '*' for all

|

||||

# (change requires restart)

|

||||

port = 5432 # (change requires restart)

|

||||

max_connections = 100 # (change requires restart)

|

||||

#superuser_reserved_connections = 3 # (change requires restart)

|

||||

unix_socket_directories = '/var/run/postgresql' # comma-separated list of directories

|

||||

# (change requires restart)

|

||||

#unix_socket_group = '' # (change requires restart)

|

||||

#unix_socket_permissions = 0777 # begin with 0 to use octal notation

|

||||

# (change requires restart)

|

||||

#bonjour = off # advertise server via Bonjour

|

||||

# (change requires restart)

|

||||

#bonjour_name = '' # defaults to the computer name

|

||||

# (change requires restart)

|

||||

|

||||

# - TCP settings -

|

||||

# see "man tcp" for details

|

||||

|

||||

#tcp_keepalives_idle = 0 # TCP_KEEPIDLE, in seconds;

|

||||

# 0 selects the system default

|

||||

#tcp_keepalives_interval = 0 # TCP_KEEPINTVL, in seconds;

|

||||

# 0 selects the system default

|

||||

#tcp_keepalives_count = 0 # TCP_KEEPCNT;

|

||||

# 0 selects the system default

|

||||

#tcp_user_timeout = 0 # TCP_USER_TIMEOUT, in milliseconds;

|

||||

# 0 selects the system default

|

||||

|

||||

# - Authentication -

|

||||

|

||||

#authentication_timeout = 1min # 1s-600s

|

||||

#password_encryption = md5 # md5 or scram-sha-256

|

||||

#db_user_namespace = off

|

||||

|

||||

# GSSAPI using Kerberos

|

||||

#krb_server_keyfile = 'FILE:${sysconfdir}/krb5.keytab'

|

||||

#krb_caseins_users = off

|

||||

|

||||

# - SSL -

|

||||

|

||||

ssl = on

|

||||

#ssl_ca_file = ''

|

||||

ssl_cert_file = '/etc/ssl/certs/ssl-cert-snakeoil.pem'

|

||||

#ssl_crl_file = ''

|

||||

ssl_key_file = '/etc/ssl/private/ssl-cert-snakeoil.key'

|

||||

#ssl_ciphers = 'HIGH:MEDIUM:+3DES:!aNULL' # allowed SSL ciphers

|

||||

#ssl_prefer_server_ciphers = on

|

||||

#ssl_ecdh_curve = 'prime256v1'

|

||||

#ssl_min_protocol_version = 'TLSv1.2'

|

||||

#ssl_max_protocol_version = ''

|

||||

#ssl_dh_params_file = ''

|

||||

#ssl_passphrase_command = ''

|

||||

#ssl_passphrase_command_supports_reload = off

|

||||

|

||||

|

||||

#------------------------------------------------------------------------------

|

||||

# RESOURCE USAGE (except WAL)

|

||||

#------------------------------------------------------------------------------

|

||||

|

||||

# - Memory -

|

||||

|

||||

shared_buffers = 128MB # min 128kB

|

||||

# (change requires restart)

|

||||

#huge_pages = try # on, off, or try

|

||||

# (change requires restart)

|

||||

#temp_buffers = 8MB # min 800kB

|

||||

#max_prepared_transactions = 0 # zero disables the feature

|

||||

# (change requires restart)

|

||||

# Caution: it is not advisable to set max_prepared_transactions nonzero unless

|

||||

# you actively intend to use prepared transactions.

|

||||

#work_mem = 4MB # min 64kB

|

||||

#hash_mem_multiplier = 1.0 # 1-1000.0 multiplier on hash table work_mem

|

||||

#maintenance_work_mem = 64MB # min 1MB

|

||||

#autovacuum_work_mem = -1 # min 1MB, or -1 to use maintenance_work_mem

|

||||

#logical_decoding_work_mem = 64MB # min 64kB

|

||||

#max_stack_depth = 2MB # min 100kB

|

||||

#shared_memory_type = mmap # the default is the first option

|

||||

# supported by the operating system:

|

||||

# mmap

|

||||

# sysv

|

||||

# windows

|

||||

# (change requires restart)

|

||||

dynamic_shared_memory_type = posix # the default is the first option

|

||||

# supported by the operating system:

|

||||

# posix

|

||||

# sysv

|

||||

# windows

|

||||

# mmap

|

||||

# (change requires restart)

|

||||

|

||||

# - Disk -

|

||||

|

||||

#temp_file_limit = -1 # limits per-process temp file space

|

||||

# in kilobytes, or -1 for no limit

|

||||

|

||||

# - Kernel Resources -

|

||||

|

||||

#max_files_per_process = 1000 # min 64

|

||||

# (change requires restart)

|

||||

|

||||

# - Cost-Based Vacuum Delay -

|

||||

|

||||

#vacuum_cost_delay = 0 # 0-100 milliseconds (0 disables)

|

||||

#vacuum_cost_page_hit = 1 # 0-10000 credits

|

||||

#vacuum_cost_page_miss = 10 # 0-10000 credits

|

||||

#vacuum_cost_page_dirty = 20 # 0-10000 credits

|

||||

#vacuum_cost_limit = 200 # 1-10000 credits

|

||||

|

||||

# - Background Writer -

|

||||

|

||||

#bgwriter_delay = 200ms # 10-10000ms between rounds

|

||||

#bgwriter_lru_maxpages = 100 # max buffers written/round, 0 disables

|

||||

#bgwriter_lru_multiplier = 2.0 # 0-10.0 multiplier on buffers scanned/round

|

||||

#bgwriter_flush_after = 512kB # measured in pages, 0 disables

|

||||

|

||||

# - Asynchronous Behavior -

|

||||

|

||||

#effective_io_concurrency = 1 # 1-1000; 0 disables prefetching

|

||||

#maintenance_io_concurrency = 10 # 1-1000; 0 disables prefetching

|

||||

#max_worker_processes = 8 # (change requires restart)

|

||||

#max_parallel_maintenance_workers = 2 # taken from max_parallel_workers

|

||||

#max_parallel_workers_per_gather = 2 # taken from max_parallel_workers

|

||||

#parallel_leader_participation = on

|

||||

#max_parallel_workers = 8 # maximum number of max_worker_processes that

|

||||

# can be used in parallel operations

|

||||

#old_snapshot_threshold = -1 # 1min-60d; -1 disables; 0 is immediate

|

||||

# (change requires restart)

|

||||

#backend_flush_after = 0 # measured in pages, 0 disables

|

||||

|

||||

|

||||

#------------------------------------------------------------------------------

|

||||

# WRITE-AHEAD LOG

|

||||

#------------------------------------------------------------------------------

|

||||

|

||||

# - Settings -

|

||||

|

||||

#wal_level = replica # minimal, replica, or logical

|

||||

# (change requires restart)

|

||||

#fsync = on # flush data to disk for crash safety

|

||||

# (turning this off can cause

|

||||

# unrecoverable data corruption)

|

||||

#synchronous_commit = on # synchronization level;

|

||||

# off, local, remote_write, remote_apply, or on

|

||||

#wal_sync_method = fsync # the default is the first option

|

||||

# supported by the operating system:

|

||||

# open_datasync

|

||||

# fdatasync (default on Linux and FreeBSD)

|

||||

# fsync

|

||||

# fsync_writethrough

|

||||

# open_sync

|

||||

#full_page_writes = on # recover from partial page writes

|

||||

#wal_compression = off # enable compression of full-page writes

|

||||

#wal_log_hints = off # also do full page writes of non-critical updates

|

||||

# (change requires restart)

|

||||

#wal_init_zero = on # zero-fill new WAL files

|

||||

#wal_recycle = on # recycle WAL files

|

||||

#wal_buffers = -1 # min 32kB, -1 sets based on shared_buffers

|

||||

# (change requires restart)

|

||||

#wal_writer_delay = 200ms # 1-10000 milliseconds

|

||||

#wal_writer_flush_after = 1MB # measured in pages, 0 disables

|

||||

#wal_skip_threshold = 2MB

|

||||

|

||||

#commit_delay = 0 # range 0-100000, in microseconds

|

||||

#commit_siblings = 5 # range 1-1000

|

||||

|

||||

# - Checkpoints -

#checkpoint_timeout = 5min              # range 30s-1d
max_wal_size = 1GB
min_wal_size = 80MB
#checkpoint_completion_target = 0.5     # checkpoint target duration, 0.0 - 1.0
#checkpoint_flush_after = 256kB         # measured in pages, 0 disables
#checkpoint_warning = 30s               # 0 disables
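
The checkpoint settings above (`max_wal_size = 1GB`, `min_wal_size = 80MB`, `checkpoint_timeout = 5min`) use the unit suffixes listed in the file header. A minimal Python sketch of the conversion follows, assuming PostgreSQL's 1024-based multiplier for memory units; the helper names `to_bytes` and `to_milliseconds` are illustrative and are not part of PostgreSQL or this add-on.

```python
import re

# Memory units from the file header; each step is a factor of 1024.
MEMORY_UNITS = {"B": 1, "kB": 1024, "MB": 1024**2, "GB": 1024**3, "TB": 1024**4}

# Time units from the file header, expressed in milliseconds.
TIME_UNITS_MS = {
    "us": 0.001,
    "ms": 1,
    "s": 1000,
    "min": 60_000,
    "h": 3_600_000,
    "d": 86_400_000,
}


def to_bytes(value: str) -> int:
    """Convert a value such as '128MB' to a number of bytes."""
    match = re.fullmatch(r"\s*(\d+)\s*(B|kB|MB|GB|TB)\s*", value)
    if match is None:
        raise ValueError(f"not a memory value with a known unit: {value!r}")
    return int(match.group(1)) * MEMORY_UNITS[match.group(2)]


def to_milliseconds(value: str):
    """Convert a value such as '5min' to milliseconds."""
    match = re.fullmatch(r"\s*(\d+)\s*(us|ms|s|min|h|d)\s*", value)
    if match is None:
        raise ValueError(f"not a time value with a known unit: {value!r}")
    return int(match.group(1)) * TIME_UNITS_MS[match.group(2)]


max_wal_bytes = to_bytes("1GB")
min_wal_bytes = to_bytes("80MB")
timeout_ms = to_milliseconds("5min")
```

The sketch only accepts values that carry an explicit unit; bare numbers are interpreted by PostgreSQL in a per-parameter default unit, which this example does not model.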

# - Archiving -

#archive_mode = off             # enables archiving; off, on, or always
                                # (change requires restart)
#archive_command = ''           # command to use to archive a logfile segment
                                # placeholders: %p = path of file to archive
                                #               %f = file name only
                                # e.g. 'test ! -f /mnt/server/archivedir/%f && cp %p /mnt/server/archivedir/%f'
#archive_timeout = 0            # force a logfile segment switch after this
                                # number of seconds; 0 disables
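
Archiving is disabled in this configuration, but the `%p` and `%f` placeholders in `archive_command` are easy to misread. The Python sketch below shows how the example command from the comment expands for one WAL segment. The function name `render_archive_command` and the sample segment path are assumptions made for this illustration; PostgreSQL performs the substitution itself before handing the command to the shell.

```python
import posixpath
import re


def render_archive_command(template: str, wal_path: str) -> str:
    """Expand %p (path), %f (file name only) and %% (literal %) in a template."""
    values = {
        "p": wal_path,
        "f": posixpath.basename(wal_path),
        "%": "%",
    }
    # A single pass avoids re-expanding text that a substitution introduced.
    return re.sub(r"%([pf%])", lambda m: values[m.group(1)], template)


template = "test ! -f /mnt/server/archivedir/%f && cp %p /mnt/server/archivedir/%f"
command = render_archive_command(template, "pg_wal/000000010000000000000001")
```

The `test ! -f` guard in the example command makes the copy fail rather than overwrite a segment that already exists in the archive directory.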

# - Archive Recovery -

|

||||

|

||||

# These are only used in recovery mode.

|

||||

|

||||

#restore_command = '' # command to use to restore an archived logfile segment

|

||||

# placeholders: %p = path of file to restore

|

||||

# %f = file name only

|

||||

# e.g. 'cp /mnt/server/archivedir/%f %p'

|

||||

# (change requires restart)

|

||||

#archive_cleanup_command = '' # command to execute at every restartpoint

|

||||

#recovery_end_command = '' # command to execute at completion of recovery

|

||||

|

||||

# - Recovery Target -

|

||||

|

||||

# Set these only when performing a targeted recovery.

|

||||

|

||||

#recovery_target = '' # 'immediate' to end recovery as soon as a

|

||||

# consistent state is reached

|

||||

# (change requires restart)

|

||||

#recovery_target_name = '' # the named restore point to which recovery will proceed

|

||||

# (change requires restart)

|

||||

#recovery_target_time = '' # the time stamp up to which recovery will proceed

|

||||

# (change requires restart)

|

||||

#recovery_target_xid = '' # the transaction ID up to which recovery will proceed

|

||||

# (change requires restart)

|

||||

#recovery_target_lsn = '' # the WAL LSN up to which recovery will proceed

|

||||

# (change requires restart)

|

||||

#recovery_target_inclusive = on # Specifies whether to stop:

|

||||

# just after the specified recovery target (on)

|

||||

# just before the recovery target (off)

|

||||

# (change requires restart)

|

||||

#recovery_target_timeline = 'latest' # 'current', 'latest', or timeline ID

|

||||

# (change requires restart)

|

||||

#recovery_target_action = 'pause' # 'pause', 'promote', 'shutdown'

|

||||

# (change requires restart)

|

||||

|

||||

|

||||

#------------------------------------------------------------------------------

|

||||

# REPLICATION

|

||||

#------------------------------------------------------------------------------

|

||||

|

||||

# - Sending Servers -

|

||||

|

||||

# Set these on the master and on any standby that will send replication data.

|

||||

|

||||

#max_wal_senders = 10 # max number of walsender processes

|

||||

# (change requires restart)

|

||||

#wal_keep_size = 0 # in megabytes; 0 disables

|

||||

#max_slot_wal_keep_size = -1 # in megabytes; -1 disables

|

||||

#wal_sender_timeout = 60s # in milliseconds; 0 disables

|

||||

|

||||

#max_replication_slots = 10 # max number of replication slots

|

||||

# (change requires restart)

|

||||

#track_commit_timestamp = off # collect timestamp of transaction commit

|

||||

# (change requires restart)

|

||||

|

||||

# - Master Server -

|

||||

|

||||

# These settings are ignored on a standby server.

|

||||

|

||||

#synchronous_standby_names = '' # standby servers that provide sync rep

|

||||

# method to choose sync standbys, number of sync standbys,

|

||||

# and comma-separated list of application_name

|

||||

# from standby(s); '*' = all

|

||||

#vacuum_defer_cleanup_age = 0 # number of xacts by which cleanup is delayed

|

||||

|

||||

# - Standby Servers -

|

||||

|

||||

# These settings are ignored on a master server.

|

||||

|

||||

#primary_conninfo = '' # connection string to sending server

|

||||

#primary_slot_name = '' # replication slot on sending server

|

||||

#promote_trigger_file = '' # file name whose presence ends recovery

|

||||

#hot_standby = on # "off" disallows queries during recovery

|

||||

# (change requires restart)

|

||||

#max_standby_archive_delay = 30s # max delay before canceling queries

|

||||

# when reading WAL from archive;

|

||||

# -1 allows indefinite delay

|

||||

#max_standby_streaming_delay = 30s # max delay before canceling queries

|

||||

# when reading streaming WAL;

|

||||

# -1 allows indefinite delay

|

||||

#wal_receiver_create_temp_slot = off # create temp slot if primary_slot_name

|

||||

# is not set

|

||||

#wal_receiver_status_interval = 10s # send replies at least this often

|

||||

# 0 disables

|

||||

#hot_standby_feedback = off # send info from standby to prevent

|

||||

# query conflicts

|

||||

#wal_receiver_timeout = 60s # time that receiver waits for

|

||||

# communication from master

|

||||

# in milliseconds; 0 disables

|

||||

#wal_retrieve_retry_interval = 5s # time to wait before retrying to

|

||||

# retrieve WAL after a failed attempt

|

||||

#recovery_min_apply_delay = 0 # minimum delay for applying changes during recovery

|

||||

|

||||

# - Subscribers -

|

||||

|

||||

# These settings are ignored on a publisher.

|

||||

|

||||

#max_logical_replication_workers = 4 # taken from max_worker_processes

|

||||

# (change requires restart)

|

||||

#max_sync_workers_per_subscription = 2 # taken from max_logical_replication_workers

|

||||

|

||||

|

||||

#------------------------------------------------------------------------------

|

||||

# QUERY TUNING

|

||||

#------------------------------------------------------------------------------

|

||||

|

||||

# - Planner Method Configuration -

|

||||

|

||||

#enable_bitmapscan = on

|

||||

#enable_hashagg = on

|

||||

#enable_hashjoin = on

|

||||

#enable_indexscan = on

|

||||

#enable_indexonlyscan = on

|

||||

#enable_material = on

|

||||

#enable_mergejoin = on

|

||||

#enable_nestloop = on

|

||||

#enable_parallel_append = on

|

||||

#enable_seqscan = on

|

||||

#enable_sort = on

|

||||

#enable_incremental_sort = on

|

||||

#enable_tidscan = on

|

||||

#enable_partitionwise_join = off

|

||||

#enable_partitionwise_aggregate = off

|

||||

#enable_parallel_hash = on

|

||||

#enable_partition_pruning = on

|

||||

|

||||

# - Planner Cost Constants -

|

||||

|

||||

#seq_page_cost = 1.0 # measured on an arbitrary scale

|

||||

#random_page_cost = 4.0 # same scale as above

|

||||

#cpu_tuple_cost = 0.01 # same scale as above

|

||||

#cpu_index_tuple_cost = 0.005 # same scale as above

|

||||

#cpu_operator_cost = 0.0025 # same scale as above

|

||||

#parallel_tuple_cost = 0.1 # same scale as above

|

||||

#parallel_setup_cost = 1000.0 # same scale as above

|

||||

|

||||

#jit_above_cost = 100000 # perform JIT compilation if available

|

||||

# and query more expensive than this;

|

||||

# -1 disables

|

||||

#jit_inline_above_cost = 500000 # inline small functions if query is

|

||||

# more expensive than this; -1 disables

|

||||

#jit_optimize_above_cost = 500000 # use expensive JIT optimizations if

|

||||

# query is more expensive than this;

|

||||

# -1 disables

|

||||

|

||||

#min_parallel_table_scan_size = 8MB

|

||||

#min_parallel_index_scan_size = 512kB

|

||||

#effective_cache_size = 4GB

|

||||

|

||||

# - Genetic Query Optimizer -

|

||||

|

||||

#geqo = on

|

||||

#geqo_threshold = 12

|

||||

#geqo_effort = 5 # range 1-10

|

||||

#geqo_pool_size = 0 # selects default based on effort

|

||||

#geqo_generations = 0 # selects default based on effort

|

||||

#geqo_selection_bias = 2.0 # range 1.5-2.0

|

||||

#geqo_seed = 0.0 # range 0.0-1.0

|

||||

|

||||

# - Other Planner Options -

|

||||

|

||||

#default_statistics_target = 100 # range 1-10000

|

||||

#constraint_exclusion = partition # on, off, or partition

|

||||

#cursor_tuple_fraction = 0.1 # range 0.0-1.0

|

||||

#from_collapse_limit = 8

|

||||

#join_collapse_limit = 8 # 1 disables collapsing of explicit

|

||||

# JOIN clauses

|

||||

#force_parallel_mode = off

|

||||

#jit = on # allow JIT compilation

|

||||

#plan_cache_mode = auto # auto, force_generic_plan or

|

||||

# force_custom_plan

|

||||

|

||||

|

||||

#------------------------------------------------------------------------------

|

||||

# REPORTING AND LOGGING

|

||||

#------------------------------------------------------------------------------

|

||||

|

||||

# - Where to Log -

|

||||

|

||||

#log_destination = 'stderr' # Valid values are combinations of

|

||||

# stderr, csvlog, syslog, and eventlog,

|

||||

# depending on platform. csvlog

|

||||

# requires logging_collector to be on.

|

||||

|

||||

# This is used when logging to stderr:

|

||||

#logging_collector = off # Enable capturing of stderr and csvlog

|

||||

# into log files. Required to be on for

|

||||

# csvlogs.

|

||||

# (change requires restart)

|

||||

|

||||

# These are only used if logging_collector is on:

|

||||

#log_directory = 'log' # directory where log files are written,

|

||||

# can be absolute or relative to PGDATA

|

||||

#log_filename = 'postgresql-%Y-%m-%d_%H%M%S.log' # log file name pattern,

|

||||

# can include strftime() escapes

|

||||

#log_file_mode = 0600 # creation mode for log files,

|

||||

# begin with 0 to use octal notation

|

||||

#log_truncate_on_rotation = off # If on, an existing log file with the

|

||||

# same name as the new log file will be

|

||||

# truncated rather than appended to.

|

||||

# But such truncation only occurs on

|

||||

# time-driven rotation, not on restarts

|

||||

# or size-driven rotation. Default is

|

||||

# off, meaning append to existing files

|

||||

# in all cases.

|

||||

#log_rotation_age = 1d # Automatic rotation of logfiles will

|

||||

# happen after that time. 0 disables.

|

||||

#log_rotation_size = 10MB # Automatic rotation of logfiles will

|

||||

# happen after that much log output.

|

||||

# 0 disables.

|

||||

|

||||

# These are relevant when logging to syslog:

|

||||

#syslog_facility = 'LOCAL0'

|

||||

#syslog_ident = 'postgres'

|

||||

#syslog_sequence_numbers = on

|

||||

#syslog_split_messages = on

|

||||

|

||||

# This is only relevant when logging to eventlog (win32):

|

||||

# (change requires restart)

|

||||

#event_source = 'PostgreSQL'

|

||||

|

||||

# - When to Log -

#log_min_messages = warning             # values in order of decreasing detail:
                                        #   debug5
                                        #   debug4
                                        #   debug3
                                        #   debug2
                                        #   debug1
                                        #   info
                                        #   notice
                                        #   warning
                                        #   error
                                        #   log
                                        #   fatal
                                        #   panic
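
The level list above is ordered, and `log_min_messages` acts as a threshold on that order. Note that `log` ranks above `error` for this setting. A short Python sketch of the comparison follows; `is_logged` is a hypothetical helper used only to illustrate the ordering, not a PostgreSQL API.

```python
# Levels in order of decreasing detail, as listed for log_min_messages.
LEVELS = [
    "debug5", "debug4", "debug3", "debug2", "debug1",
    "info", "notice", "warning", "error", "log", "fatal", "panic",
]


def is_logged(message_level: str, log_min_messages: str = "warning") -> bool:
    """Return True if a message at message_level passes the threshold."""
    return LEVELS.index(message_level) >= LEVELS.index(log_min_messages)


notice_logged = is_logged("notice")  # below the default threshold
log_logged = is_logged("log")        # "log" ranks above "error" here
```

With the default `warning` threshold, `notice` messages are dropped from the server log while `warning`, `error`, `log`, `fatal`, and `panic` messages are written.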

#log_min_error_statement = error # values in order of decreasing detail:

|

||||

# debug5

|

||||

# debug4

|

||||

# debug3

|

||||

# debug2

|

||||

# debug1

|

||||

# info

|

||||

# notice

|

||||

# warning

|

||||

# error

|

||||

# log

|

||||

# fatal

|

||||

# panic (effectively off)

|

||||

|

||||

#log_min_duration_statement = -1 # -1 is disabled, 0 logs all statements

|

||||

# and their durations, > 0 logs only

|

||||

# statements running at least this number

|

||||

# of milliseconds

|

||||

|

||||

#log_min_duration_sample = -1 # -1 is disabled, 0 logs a sample of statements

|

||||

# and their durations, > 0 logs only a sample of

|

||||

# statements running at least this number

|

||||

# of milliseconds;

|

||||

# sample fraction is determined by log_statement_sample_rate

|

||||

|

||||

#log_statement_sample_rate = 1.0 # fraction of logged statements exceeding

|

||||

# log_min_duration_sample to be logged;

|

||||

# 1.0 logs all such statements, 0.0 never logs

|

||||

|

||||

|

||||

#log_transaction_sample_rate = 0.0 # fraction of transactions whose statements

|

||||

# are logged regardless of their duration; 1.0 logs all

|

||||

# statements from all transactions, 0.0 never logs

|

||||

|

||||

# - What to Log -

|

||||

|

||||

#debug_print_parse = off

|

||||

#debug_print_rewritten = off

|

||||

#debug_print_plan = off

|

||||

#debug_pretty_print = on

|

||||

#log_checkpoints = off

|

||||

#log_connections = off

|

||||

#log_disconnections = off

|

||||

#log_duration = off

|

||||

#log_error_verbosity = default # terse, default, or verbose messages

|

||||

#log_hostname = off

|

||||

log_line_prefix = '%m [%p] %q%u@%d '    # special values:
                                        #   %a = application name
                                        #   %u = user name
                                        #   %d = database name
                                        #   %r = remote host and port
                                        #   %h = remote host
                                        #   %b = backend type
                                        #   %p = process ID
                                        #   %t = timestamp without milliseconds
                                        #   %m = timestamp with milliseconds
                                        #   %n = timestamp with milliseconds (as a Unix epoch)
                                        #   %i = command tag
                                        #   %e = SQL state
                                        #   %c = session ID
                                        #   %l = session line number
                                        #   %s = session start timestamp
                                        #   %v = virtual transaction ID
                                        #   %x = transaction ID (0 if none)
                                        #   %q = stop here in non-session
                                        #        processes
                                        #   %% = '%'
                                        # e.g. '<%u%%%d> '
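
To make the configured prefix `'%m [%p] %q%u@%d '` concrete, the Python sketch below expands it for a session process and for a background (non-session) process, where `%q` cuts the prefix short. It is a simplified illustration: `expand_prefix` and the sample field values are assumptions, and padding modifiers that PostgreSQL accepts in escapes (for example `%-10u`) are not handled.

```python
def expand_prefix(prefix: str, fields: dict, session: bool = True) -> str:
    """Expand log_line_prefix escapes using values from fields (keyed by letter)."""
    out = []
    i = 0
    while i < len(prefix):
        ch = prefix[i]
        if ch != "%" or i + 1 >= len(prefix):
            out.append(ch)  # ordinary character (or a trailing lone '%')
            i += 1
            continue
        code = prefix[i + 1]
        i += 2
        if code == "%":
            out.append("%")  # %% is a literal percent sign
        elif code == "q":
            if not session:
                break  # non-session processes stop at %q
        else:
            out.append(str(fields.get(code, "")))
    return "".join(out)


# Sample values, invented for the example.
fields = {"m": "2024-01-01 00:00:00.000 UTC", "p": 42, "u": "compreface", "d": "frs"}
prefix = "%m [%p] %q%u@%d "
session_line = expand_prefix(prefix, fields, session=True)
background_line = expand_prefix(prefix, fields, session=False)
```

For a client session this yields `2024-01-01 00:00:00.000 UTC [42] compreface@frs `, while a background process gets only the timestamp and process ID.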

#log_lock_waits = off # log lock waits >= deadlock_timeout

|

||||

#log_parameter_max_length = -1 # when logging statements, limit logged

|

||||

# bind-parameter values to N bytes;

|

||||

# -1 means print in full, 0 disables

|

||||

#log_parameter_max_length_on_error = 0 # when logging an error, limit logged

|

||||

# bind-parameter values to N bytes;

|

||||

# -1 means print in full, 0 disables

|

||||

#log_statement = 'none' # none, ddl, mod, all

|

||||

#log_replication_commands = off

|

||||

#log_temp_files = -1 # log temporary files equal or larger

|

||||

# than the specified size in kilobytes;

|

||||

# -1 disables, 0 logs all temp files

|

||||

log_timezone = 'Etc/UTC'

|

||||

|

||||

#------------------------------------------------------------------------------

|

||||

# PROCESS TITLE

|

||||

#------------------------------------------------------------------------------

|

||||

|

||||

cluster_name = '13/main' # added to process titles if nonempty

|

||||

# (change requires restart)

|

||||

#update_process_title = on

|

||||

|

||||

|

||||

#------------------------------------------------------------------------------

|

||||

# STATISTICS

|

||||

#------------------------------------------------------------------------------

|

||||

|

||||

# - Query and Index Statistics Collector -

|

||||

|

||||

#track_activities = on

|

||||

#track_counts = on

|

||||

#track_io_timing = off

|

||||

#track_functions = none # none, pl, all

|

||||

#track_activity_query_size = 1024 # (change requires restart)

|

||||

stats_temp_directory = '/var/run/postgresql/13-main.pg_stat_tmp'

|

||||

|

||||

|

||||

# - Monitoring -

|

||||

|

||||

#log_parser_stats = off

|

||||

#log_planner_stats = off

|

||||

#log_executor_stats = off

|

||||

#log_statement_stats = off

|

||||

|

||||

|

||||

#------------------------------------------------------------------------------

|

||||